Datactics Experiments with Knowledge Graphs

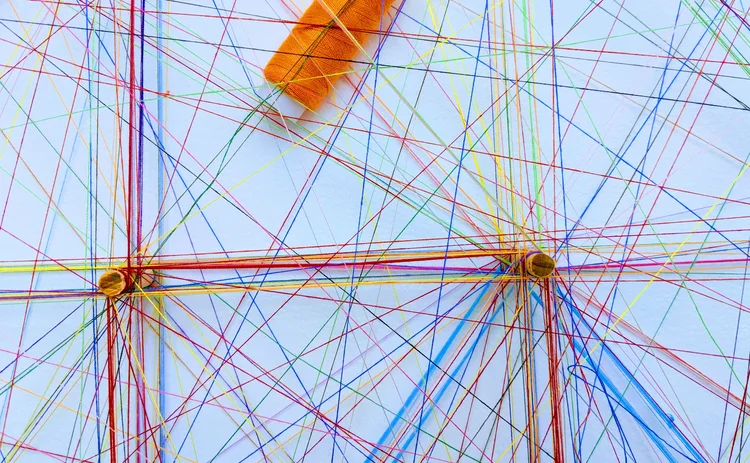

The company aims to show that pairing good quality data with knowledge graphs can lead to links that previously would have been missed.

Belfast-based Datactics is experimenting with knowledge graphs, which are becoming increasingly popular among financial services firms, and could improve a firm’s downstream data analysis, such as for fraud detection.

It is working on an internal project using datasets from UK Companies House, which registers company information and makes it available to the public, to illustrate how firms can improve downstream steps once their data is aligned, particularly when the quality of the information is good from the start.

Fiona Browne, head of artificial intelligence (AI) at Datactics, says clients would typically use these datasets for downstream analysis like fraud detection.

“What we aim to show is that you can take in datasets and perform a proper matching, de-duplication, and so on, and you could potentially find things within these knowledge graphs that you may have missed without the proper [data] cleansing process at the start,” she says.

For the project, Datactics is applying its data quality engine to the construction of these entity groups.

“So it’s taking in data sources, which these graphs are then built from, ensuring the quality of those data sources—so applying the concepts of your profiling data quality, cleansing, matching, de-duplication, and so on—to feed into graph databases. For example, we often hear about graph databases like Neo4J and the visualization of the graphs, but a lot of the hard work is actually getting to that stage,” she says.
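The preparation stages Browne lists can be illustrated with a minimal sketch. It is not Datactics' engine: the field names, sample rows, and the `clean`, `profile`, and `deduplicate` helpers are all hypothetical, and the rules are deliberately simplistic.

```python
import re

def clean(value):
    """Lowercase, replace punctuation with spaces, collapse whitespace."""
    return " ".join(re.sub(r"[^a-z0-9 ]", " ", value.lower()).split())

def profile(rows, fields):
    """Share of rows in which each field is missing or blank."""
    return {
        field: sum(1 for row in rows if not (row.get(field) or "").strip()) / len(rows)
        for field in fields
    }

def deduplicate(rows, key_fields):
    """Keep the first row seen for each cleaned key."""
    seen = {}
    for row in rows:
        key = tuple(clean(row.get(field) or "") for field in key_fields)
        seen.setdefault(key, row)
    return list(seen.values())

# Hypothetical sample rows, not real Companies House data.
rows = [
    {"name": "Acme Widgets Ltd.", "postcode": "BT1 1AA"},
    {"name": "ACME WIDGETS LTD", "postcode": "bt1 1aa"},
    {"name": "Cedar Holdings Ltd", "postcode": ""},
]

missing = profile(rows, ["name", "postcode"])
unique_rows = deduplicate(rows, ["name", "postcode"])
```

Here the first two rows collapse into one record once case and punctuation are ignored, and the profile shows that a third of the rows lack a postcode. Only after steps like these would the records be loaded into a graph database.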

For example, Browne says the UK’s Companies House datasets are “incredibly messy,” even though they are government datasets.

“First, by tidying up and then standardizing the datasets, you’re able to then match it to another dataset—for example, persons of significant control. So, the persons of significant control dataset contains information on, maybe, directors who own shares within the company. And whenever you model that together in a knowledge graph, what this can show is maybe a director has a link to another company, which can be inferred through links to other companies and to other people,” she says.
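As a rough illustration of the linking Browne describes, the sketch below standardizes company and person names and then looks for companies connected through a shared person. The records, the `normalize` rule, and the function names are assumptions made for the example; real persons of significant control data has far more fields and needs far more careful matching.

```python
import re
from collections import defaultdict

def normalize(text):
    """Lowercase, replace punctuation with spaces, collapse whitespace."""
    return " ".join(re.sub(r"[^a-z0-9 ]", " ", text.lower()).split())

# Hypothetical (company, person with significant control) pairs.
psc_records = [
    ("Acme Widgets Ltd.", "Jane  Smith"),
    ("ACME WIDGETS LTD", "Tom Jones"),
    ("Borealis Trading Limited", "jane smith"),
    ("Cedar Holdings Ltd", "Tom Jones"),
]

def build_graph(records):
    """Map each normalized person to the set of normalized companies."""
    person_to_companies = defaultdict(set)
    for company, person in records:
        person_to_companies[normalize(person)].add(normalize(company))
    return person_to_companies

def linked_companies(graph, company):
    """Companies that share at least one person with `company`."""
    target = normalize(company)
    linked = set()
    for companies in graph.values():
        if target in companies:
            linked |= companies - {target}
    return linked

graph = build_graph(psc_records)
links = linked_companies(graph, "Acme Widgets Ltd")
```

Without the standardization step, "Acme Widgets Ltd." and "ACME WIDGETS LTD" would be treated as two separate companies, and the link from Acme to both Borealis Trading and Cedar Holdings would not appear.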

It is this inferred knowledge that knowledge graphs can extract. Browne says this process won’t be possible with messy data.

Alex Brown, CTO at Datactics, explains that the serious problems with those datasets are mostly due to human input errors, such as spelling.

All the information in a database like the one provided by UK Companies House comes directly from a form that is either filled out by hand or typed in on the website whenever someone forms a company or declares a change in share capital structure, for example.

“All of that information has to be entered manually. And consequently, as a result, there are lots of errors in it. For example, if I’m the owner of a company, I’m a director and my name is Alex Brown. There’s nothing preventing me, in another update, from putting my name as A. Brown,” he says. “[The process] inherently leads to a multitude of data quality problems. We see things like A. Brown being the same person as Alex Brown or the same person as Alexandra Brown, and they might be associated with three different companies, but they [also] might be the same person.”
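Brown's example can be turned into a small sketch of candidate matching. The rule used here (same surname, and one first name is an initial or prefix of the other) is an assumption chosen for illustration, not Datactics' matching logic, and it only produces candidates for review, since the people behind these names may or may not be the same person.

```python
import re
from itertools import combinations

def name_parts(full_name):
    """Return (first token, last token) of a lowercased, letters-only name."""
    tokens = re.sub(r"[^a-z ]", " ", full_name.lower()).split()
    return tokens[0], tokens[-1]

def could_be_same_person(a, b):
    """True if surnames match and one first name is a prefix of the other."""
    first_a, last_a = name_parts(a)
    first_b, last_b = name_parts(b)
    if last_a != last_b:
        return False
    return first_a.startswith(first_b) or first_b.startswith(first_a)

# Names as they might appear across separate filings (hypothetical).
names = ["Alex Brown", "A. Brown", "Alexandra Brown", "Bob Brown"]

candidate_pairs = [
    (a, b) for a, b in combinations(names, 2) if could_be_same_person(a, b)
]
```

The three "A" variants all pair with one another, while "Bob Brown" pairs with none of them. In practice, further attributes such as date of birth or address would be needed to confirm or reject each candidate pair.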

This is where knowledge graphs can be useful: finding links that were previously unknown due to discrepancies in the data. Brown says these links can then be used for risk analysis in the know-your-customer (KYC) onboarding process, or for detecting phoenixing, the practice of insolvent companies setting up new companies under slightly different names, usually to evade creditors.
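One simple way to surface possible phoenix companies is to compare the names of dissolved companies with those of newly registered ones. The sketch below uses Python's standard `difflib.SequenceMatcher` for the comparison. The company names, the list of legal suffixes, and the 0.8 threshold are all assumptions for illustration, and a name match alone proves nothing without further evidence such as shared directors or addresses.

```python
import re
from difflib import SequenceMatcher

# Assumed list of legal suffixes to ignore when comparing names.
LEGAL_SUFFIXES = {"ltd", "limited", "plc", "llp"}

def core_name(company):
    """Lowercase, keep letters only, and drop legal suffixes."""
    tokens = re.sub(r"[^a-z ]", " ", company.lower()).split()
    return " ".join(t for t in tokens if t not in LEGAL_SUFFIXES)

def phoenix_candidates(dissolved, new, threshold=0.8):
    """Pairs of (dissolved, new, score) whose core names are similar."""
    matches = []
    for old_name in dissolved:
        for new_name in new:
            score = SequenceMatcher(
                None, core_name(old_name), core_name(new_name)
            ).ratio()
            if score >= threshold:
                matches.append((old_name, new_name, score))
    return matches

# Hypothetical company names.
dissolved = ["Brown Building Services Ltd"]
new = ["Brown Building Service (2020) Limited", "Cedar Holdings Ltd"]

candidates = phoenix_candidates(dissolved, new)
```

Only the near-identical name is flagged here. The threshold trades false positives against missed matches and would need tuning on real data.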

“I remember it was a year ago, maybe a bit longer than that, a big focus of the Financial Conduct Authority (FCA) was trying to take on these phoenixes. And a lot of it is hindered by the fact that people are able to manipulate the data and do what they like when they register the company without the ability to [do that] centrally and the banks have to then try and find it when they’re actually carrying out their own due diligence on providing facilities to those people. And that can be made much more complex if there isn’t a reliable central repository of data,” he adds.

The project is still in the research-and-development stage, and Datactics has yet to finalize how it will productize and monetize the work. But Brown says a key feature to come out of it will be an off-the-shelf configuration that allows customers to build knowledge graphs themselves using Datactics’ technology platform.

“Other areas we might productize is providing the knowledge graphs themselves, moving into publishing the data rather than the actual platform and technology to the model. We haven’t decided yet whether or not we’ll do that,” he says.

Copyright Infopro Digital Limited. All rights reserved.
