Waters Wrap: After CrowdStrike crisis, will anyone learn?

Several bank and hedge fund sources tell Anthony that while there’s plenty to be learned from the CrowdStrike bug, some will more than likely forget those lessons in a few weeks’ time.

In the intelligence community, there’s a prevailing thought that goes something like this: the public never hears about your successes, only your failures. Well, that’s also a strong sentiment felt by many who work in IT. A thousand unnoticed software patches and upgrades? Yeah, that’s your job. One major outage? Fire everyone.

But late nights and angry calls and emails are all part of the job.

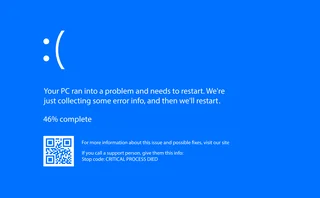

Now, I would assume that any subscriber to WatersTechnology is aware of the global outage that affected Microsoft Windows-based systems due to a faulty update by cybersecurity company CrowdStrike. The skinny of it was that it was not a hack or cyber attack, just a bug, but it knocked out about 8.5 million Windows machines starting on Friday, July 19. Some are saying it’s “the biggest IT failure that we’ve ever had.” If you want a more detailed analysis of what went down, I highly recommend reading this summary from the Pragmatic Engineer newsletter, or, if you’re a subscriber to the Wall Street Journal, the excellent reporting over the last week from James Rundle (a WatersTechnology alum!) and his team.

I was on holiday when the mayhem kicked off. When I got back, I spoke with a few well-connected bank IT consultants. Oddly, the impression I was getting—at least at the IT level—was that outages are part of their daily lives. Yes, this one was certainly much bigger than most, but it was handled relatively well in the capital markets (I can’t speak for airlines or healthcare providers).

After speaking with a few more sources, it’s clear that there are lessons to be learned. But will banks, asset managers, exchanges, and vendors put the time and resources into improving quality assurance (QA) and disaster recovery (DR)? More often than not, the answer is yes in the short term, but it will all be forgotten in the long run. Still, let’s take a look at what should be done if that weren’t the case.

‘All hands on deck’

“Myself and many others here were all hands on deck for most of Friday, although nothing bad really materialized,” says the chief information officer at a large US bank.

They say that IT determined “pretty quickly” that the bank’s infrastructure was not impacted because it doesn’t run CrowdStrike and doesn’t use the Azure cloud in-house. Next, they reached out to service providers, SaaS providers, key partners, and others to verify they were okay, which they were. Other bank sources had different experiences, and for them, it was a Friday that lasted deep into the night. Ultimately, though, the disruption was largely contained and resolved in relatively short order. By Monday, it was business as usual.

So…no (real) harm, no foul—right?

“Everyone is leaning back, satisfied they can point the finger elsewhere,” says a second CIO at an international tier-1 bank. “However, it highlights the lack of protocols to upgrade software across large institutions in an increasingly digitally connected world.”

They say it would seem that this patch wasn’t verified, and they wonder why it wasn’t rolled out in a more staggered manner. Verification and staggered rollouts are both widely known best practices.

“There used to be a time when before you released your code, you rehearsed things in your head and on paper, and you scripted your actions to ensure you had mitigated any operational risk to the business and your clients. It is the one risk that tech is tasked with managing and mitigating,” the second CIO says.

The first CIO echoed this sentiment, noting that as the industry moves to the cloud and leans into AI, APIs, and open-source tools, finance firms now “live in an industry ecosystem,” so IT professionals have to make sure all inhabitants of the ecosystem are all right.

“CrowdStrike needs to improve its testing approach, where it tries its releases on a smaller population of endpoints before releasing them to the entire Earth. That should not be that difficult for them,” they say. “Hopefully, other vendors will pay attention to this event and adjust accordingly.”

That said, the first CIO offers this caveat: “The risks may be there in a cloud ecosystem, but the alternative—bringing it all back in-house—is worse!”

What did we learn?

A former buy-side chief technology officer says that “most likely, everyone will forget this in two weeks and just move on, but it is really scary that there are so many major points of failure that can be introduced by so many players.”

It’s simple to say that IT professionals should provide deeper vetting and auditing of software updates, both internally and from vendors. But it is just so much easier—especially when it concerns a trusted vendor—to simply allow automatic updates. Putting in more manual intervention slows things down when everyone is trying to speed up. It requires more manpower and financial resources. And if you turn off automatic updates and you are slow to manually make those updates, it potentially opens the firm up to a cyber attack. Essentially, it’s a case of damned if you do, damned if you don’t.

The CTO says the CrowdStrike incident is a vivid reminder that “QA still matters; that IT teams are slaves to their vendors; and that the world will probably just move on and forget. But the smartest firms will be asking themselves, what should we do to make the future better than the present?”

Everyone I spoke to is still perplexed as to how this rollout was just blasted out “to the entire Earth”, as the US CIO put it, rather than a staged/staggered/canary (use the term you like best) rollout. The Pragmatic Engineer newsletter I mentioned previously put it best: “CrowdStrike seems to have chosen the ‘YOLO’ option for this change, and it cost them dearly.”
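For readers less familiar with the term, the idea behind a staged rollout is simple enough to sketch. What follows is a minimal, hypothetical Python illustration, not CrowdStrike’s actual pipeline or any vendor’s tooling. The ring sizes (1% of the fleet, then 10%, then everyone), the 1% failure threshold, and the `deploy` health-check hook are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Sequence


@dataclass
class RolloutResult:
    updated: int                # endpoints that received the update
    halted: bool                # True if a ring failed its health check
    failed_ring: Optional[int]  # index of the ring that failed, if any


def staged_rollout(
    endpoints: Sequence[str],
    ring_fractions: Sequence[float],
    deploy: Callable[[str], bool],
    max_failure_rate: float = 0.01,
) -> RolloutResult:
    """Push an update ring by ring, stopping as soon as a ring looks unhealthy.

    ring_fractions are cumulative shares of the fleet, e.g. (0.01, 0.1, 1.0).
    deploy(endpoint) is a hypothetical hook: it installs the update and
    returns True if the endpoint is still healthy afterwards.
    """
    total = len(endpoints)
    done = 0
    for ring, fraction in enumerate(ring_fractions):
        target = min(total, round(total * fraction))
        ring_members = endpoints[done:target]
        if not ring_members:
            continue
        failures = sum(1 for ep in ring_members if not deploy(ep))
        done = target
        # Stop the rollout before the next, larger ring if too many machines broke.
        if failures / len(ring_members) > max_failure_rate:
            return RolloutResult(updated=done, halted=True, failed_ring=ring)
    return RolloutResult(updated=done, halted=False, failed_ring=None)


# Usage: a fleet of 1,000 hypothetical machines.
fleet = [f"host-{i}" for i in range(1000)]


def good_update(endpoint: str) -> bool:
    return True   # every machine survives the update


def bad_update(endpoint: str) -> bool:
    return False  # every machine crashes, as with a faulty update


print(staged_rollout(fleet, (0.01, 0.1, 1.0), good_update))
# RolloutResult(updated=1000, halted=False, failed_ring=None)
print(staged_rollout(fleet, (0.01, 0.1, 1.0), bad_update))
# RolloutResult(updated=10, halted=True, failed_ring=0)
```

In this toy run, the faulty update reaches 10 machines out of 1,000 before the rollout halts, rather than the whole fleet. A real deployment would also wait between rings and watch telemetry over time, which is left out here for brevity.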

Steve Moreton, global head of project management at consultancy CJC, who has nearly 30 years of experience in the IT and market data spaces, says the CrowdStrike issue was particularly notable because it highlighted a “defeat in victory” scenario. He points to a long-forgotten outage from 2012, when Commonwealth Bank released a patch that caused the bank’s entire network to become unusable for days.

“With IT support, there’s a clear observer effect, where the act of observing a situation inherently changes it. The reality is that [even with] an IT team following all the right procedures and best practices, something could still go wrong,” he says. “I’d compare this to a plane crash due to pilot error—even the most well-trained and experienced pilots can still make fatal mistakes. It’s a good philosophy for IT teams to keep this potential in mind during risk assessments. Again, as observers, we can affect the situation.”

Moreton notes a parallel between the Commonwealth Bank and CrowdStrike incidents: one was an update thought to be safe that crashed the network because it was deployed to everyone, while the other was a small but flawed update, again sent out to everyone. He says that for the best IT outfits, strong development, QA processes, and testing are crucial, and a staggered rollout, while slower, is best practice. But there’s one other important component to software development.

“Things like CrowdStrike’s update can still slip through the cracks. This is where disaster recovery comes into play—having a robust disaster recovery plan is essential to handle the unexpected,” Moreton says.

So, will the industry learn these lessons? Some might, but most sources spoken to for this column have their doubts. The markets are only becoming more interconnected, and more firms are moving more processes and platforms to the cloud. There is pressure to roll out new AI-driven tools. Open-source development is only becoming more popular. Interoperability and speed-to-market are imperative. So while CrowdStrike might give non-IT executives pause, barring some outside entity (meaning a regulator) coming in and stopping them, they’ll be pressing play soon enough.

This means, says the CIO at the international bank, that, as always, the responsibility will fall to IT professionals to make the case for better software development and QA/DR processes.

“We have become complacent—this is a wake-up call,” says the CIO. “As technologists in a digitally interlinked world, we must remember our responsibility to manage operational risk. As Spider-Man taught us, with great power comes great responsibility.”

The image accompanying this column is “The Temptation of Saint Anthony” by Herri met de Bles, courtesy of The Met’s open-access program.

Copyright Infopro Digital Limited. All rights reserved.